Think of the robots.txt file as your site’s silent guide for search engines. It tells search engine bots which parts of your site they may and may not crawl. Creating this file correctly lets you influence how search engines explore and understand your website. In this guide, we’ll take you through simple steps to make a practical robots.txt file.

Why Create an Effective Robots.txt File

When you create a robots.txt file, you essentially direct how search engines crawl your content. If there are specific areas you want to keep certain bots from exploring, or if you want to ensure your site is crawled efficiently, this file becomes your remote control for search engine crawlers.

For instance, let’s say you don’t want search engines crawling your “Terms and Conditions” page. You can add a rule to robots.txt that tells bots not to access this page. Remember that robots.txt controls crawling, not indexing: if a search engine has already seen the page, blocking it won’t erase it from their records.

Here’s an example of how you’d code this:

    User-agent: *
    Disallow: /terms-and-conditions/

This simple rule tells all bots (denoted by “*”) not to crawl the “terms-and-conditions” directory on your site. It’s like putting up a “No Entry” sign for search engines specifically for that page.
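If you’d like to see how a crawler might read that rule, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The bot name `ExampleBot` and the `www.yourwebsite.com` URLs are placeholders for illustration, and real search engines may interpret edge cases differently from this parser.

```python
from urllib.robotparser import RobotFileParser

# The rule from the example above, written as robots.txt text.
RULES = """\
User-agent: *
Disallow: /terms-and-conditions/
"""


def is_allowed(rules: str, user_agent: str, url: str) -> bool:
    """Return True if `user_agent` may crawl `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())  # parse text directly, no network request
    return parser.can_fetch(user_agent, url)


# "ExampleBot" is a hypothetical crawler name used only for this demo.
blocked = is_allowed(RULES, "ExampleBot", "https://www.yourwebsite.com/terms-and-conditions/")
allowed = is_allowed(RULES, "ExampleBot", "https://www.yourwebsite.com/blog/")
print(blocked, allowed)  # prints: False True
```

The rule matches by path prefix, so anything under `/terms-and-conditions/` is blocked while the rest of the site stays crawlable.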

Checking Your Robots.txt: Is It Doing Its Job?

To check that your robots.txt is doing its job, append “/robots.txt” to your web address in the browser’s address bar (e.g., www.yourwebsite.com/robots.txt).

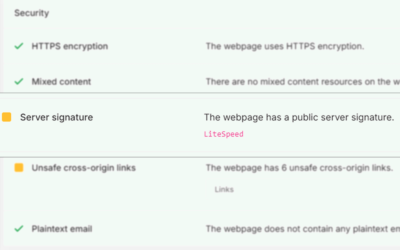

This will display the contents of your robots.txt file. Confirm that the rules you’ve set appear exactly as you wrote them. You can also validate and test your file in Google Search Console, where the robots.txt report has taken over from the older Robots.txt Tester.
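The same check can be scripted. The sketch below, which assumes only Python’s standard library, builds the robots.txt address from any page URL on your site and includes a small helper to download the file. The function names and the example URL are made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.request import urlopen


def robots_txt_url(page_url: str) -> str:
    """Build the robots.txt address for the site that hosts `page_url`."""
    parts = urlsplit(page_url)
    # robots.txt always sits at the root of the host, whatever the page path is.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def fetch_robots_txt(page_url: str, timeout: float = 10.0) -> str:
    """Download the robots.txt text (makes a real network request)."""
    with urlopen(robots_txt_url(page_url), timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")


# Placeholder address; swap in a page from your own site.
print(robots_txt_url("https://www.yourwebsite.com/blog/post?id=1"))
# prints: https://www.yourwebsite.com/robots.txt
```

Calling `fetch_robots_txt(...)` with your own address should return the same text you see in the browser.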

If all looks well and aligns with your intentions, your robots.txt is successfully directing search engine bots according to your specifications. Remember that changes may take some time to reflect in search results, so be patient as search engines adapt to the updated instructions.

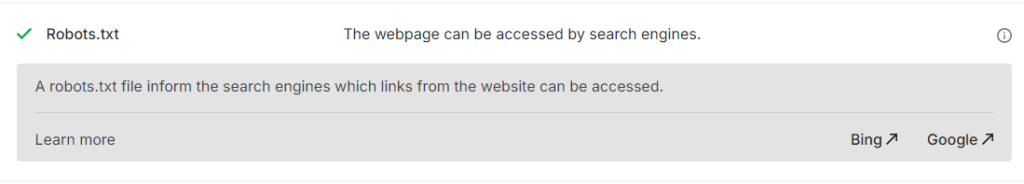

Using Our Free Tool

Consider using our tool for more detailed insight into your website’s robots.txt. It provides additional information and offers suggestions on optimizing your file for better performance.

How to Create an Effective Robots.txt File?

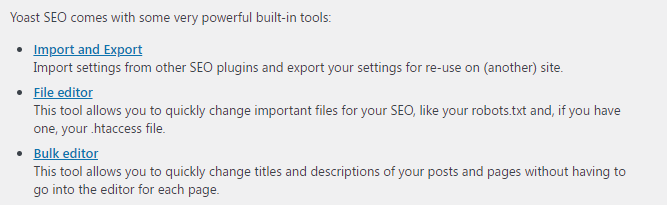

If your website runs on a CMS with an SEO plugin, managing the robots.txt file is easy. These plugins simplify the implementation process; in some cases, they may even create the file for you.

Take Yoast, for instance: under “Tools” and then “File Editor,” you can easily find your robots.txt. If your site doesn’t run on a CMS, don’t worry. Creating the file manually is a straightforward process. Let’s walk through the steps.

Step 1: Access Your Website’s Root Directory

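Find your website’s root directory, the top-level folder that holds your site’s main files (on many hosts it is named something like public_html or www). The robots.txt file needs to live here so that it is served at yourwebsite.com/robots.txt.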

Step 2: Create a New Text File

Whether you use your host’s File Manager, an FTP client such as FileZilla, or a text editor on your own computer, the process is the same: create a new, empty plain-text file.

Step 3: Name it “robots.txt”

Save this text file with the name “robots.txt,” keeping it all in lowercase.
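If you prefer to script Steps 2 and 3, here is a minimal sketch in Python. It assumes you have a local copy of your site’s root folder. The helper name `write_robots_txt` is hypothetical, and the demo writes into a temporary folder so nothing on your real site is touched.

```python
import tempfile
from pathlib import Path


def write_robots_txt(root_dir: str, rules: str) -> Path:
    """Write `rules` to <root_dir>/robots.txt and return the file's path."""
    target = Path(root_dir) / "robots.txt"  # the name must be all lowercase
    target.write_text(rules, encoding="utf-8")
    return target


# Demo: a temporary folder stands in for your website's root directory.
with tempfile.TemporaryDirectory() as fake_root:
    path = write_robots_txt(fake_root, "User-agent: *\nDisallow: /private/\n")
    print(path.name)  # prints: robots.txt
    print(path.read_text(encoding="utf-8"), end="")
```

After creating the file locally, you would still upload it to the root directory with your File Manager or FTP client.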

Step 4: Understand Syntax and Directives

Get to know the main directives. “User-agent” names the bot that a group of rules applies to (“*” means every bot), and “Disallow” gives a path that bot should not crawl.
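To make the syntax concrete: each line is a directive name, a colon, and a value. The short Python sketch below splits robots.txt text into those pairs. It is a simplified illustration rather than a full parser, and the function name `parse_directives` is made up for this example.

```python
from typing import List, Tuple


def parse_directives(text: str) -> List[Tuple[str, str]]:
    """Split robots.txt text into (directive, value) pairs."""
    pairs = []
    for raw_line in text.splitlines():
        line = raw_line.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line or ":" not in line:
            continue  # skip blank or malformed lines
        field, value = line.split(":", 1)
        # Directive names are case-insensitive, so normalise them to lowercase.
        pairs.append((field.strip().lower(), value.strip()))
    return pairs


sample = "User-agent: *\nDisallow: /private/  # keep bots out\n"
print(parse_directives(sample))
# prints: [('user-agent', '*'), ('disallow', '/private/')]
```

Note that only the directive name is lowercased. Paths are left as written, because they are matched case-sensitively.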

Step 5: Example Structure

Keep things straightforward, like this example:

    User-agent: *
    Disallow: /private/

In this example, every bot (denoted by “*”) receives a clear message to steer clear of the “/private/” directory. Simple and effective!
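If you manage rules for more than one bot, you could generate this structure instead of typing it by hand. The following is a rough sketch, assuming a simple mapping of bot names to disallowed paths. The helper `build_robots_txt` is hypothetical, not part of any plugin or library.

```python
from typing import Dict, List


def build_robots_txt(groups: Dict[str, List[str]]) -> str:
    """Render {user_agent: [disallowed paths]} as robots.txt text."""
    lines: List[str] = []
    for user_agent, disallowed_paths in groups.items():
        if lines:
            lines.append("")  # blank line separates one group from the next
        lines.append(f"User-agent: {user_agent}")
        for path in disallowed_paths:
            lines.append(f"Disallow: {path}")
    return "\n".join(lines) + "\n"


# Reproduces the example structure shown above.
print(build_robots_txt({"*": ["/private/"]}), end="")
```

Each key starts a new “User-agent” group, and every path in its list becomes one “Disallow” line beneath it.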